With the release of GPT-4 we have seen a significant increase in related tooling, and an API key is one of the best ways for you to take advantage of the latest developments.

It’s not that OpenAI is the only game in town; it’s that ChatGPT has captured the attention of the masses. That may change in time, but it’s the case today, so let’s dive in. In the past it was common for only developers to need API keys, but in today’s article I’ll explain why having an OpenAI API key may be a good idea for you.

In case you are new to API keys I’ll also give you a brief introduction so you can have a basic understanding of what they are and how they work. In addition to the benefits I will also cover some risks and other considerations.

Do You Really Need an API Key?

Although a ChatGPT Plus subscription has benefits,[1] there is perhaps no better way to get in on the latest action than with an API key. The main reason is that you can easily use a wealth of open source tooling that others have created. If you’re a non-technical user there are still reasons to consider it, but you’ll have to make the call yourself.

ChatGPT Plus is a monthly subscription to a website you interact with. API access is pay-per-use and gives you more advanced web-based functionality as well as programmatic access. Power users may be getting a better deal through ChatGPT Plus, while light users may be overpaying. If you fall somewhere in between, the API is probably the better deal, if for no other reason than your ability to use the Playground. Don’t get too hung up on the money, because I expect pricing will change and there are more considerations than just the cost.

These APIs are effectively general-purpose services. You’ve never before been able to accomplish so much for so little; the current pay-per-use cost is a small price to pay for what you can do through the API. Previously it was not uncommon for a developer to pay for API access and then provide a service to clients. However, in this case it makes sense for individuals to consider direct API access. There are three main reasons:

The Playground - for advanced prompting

API Access - for programmatic use

Value-added Service Providers - for many other cool things

Let’s talk about each of these in more detail.

The Playground

The Playground is very similar to ChatGPT Plus, except you can do more with it.

If you are going beyond the most basic interactions you will be able to take advantage of this added functionality. Whether or not you use the extra capabilities, light users might save some money compared to ChatGPT Plus since it is pay-per-use.

To gain access to the Playground you will have to sign up for a paid OpenAI account. Note that this is the same account that gives you API access.

This won’t be a Playground tutorial, but I do want to give you a quick intro so you can have a ChatGPT Plus-like experience. Let’s use gpt-3.5-turbo as an example.

SYSTEM (role)

This is the context that you provide. It acts as a prefix that tells the model what it is and how it should behave.

USER/ASSISTANT (roles)

USER is your prompt, i.e., what you would type into the text box in ChatGPT. ASSISTANT is the model’s response.

These can also be strung together as multiple prompt/completion (user/assistant) pairs as a way of providing a few-shot setup for your prompt.

I suspect that your interactions with the UI are provided to the API using these roles.
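To make the roles concrete, here is a minimal sketch of how a system message, one few-shot user/assistant pair, and a final user prompt could be laid out as a list of messages. The message contents and the `count_roles` helper are made up for illustration; only the role names come from the Playground.

```python
# Roles laid out as a list of messages, in the order the model would read them.
# All message contents here are invented for illustration.
messages = [
    # SYSTEM: the context that says how the assistant should behave.
    {"role": "system", "content": "You are a concise assistant that answers in one sentence."},
    # Few-shot example: one earlier user/assistant exchange.
    {"role": "user", "content": "What is an API key?"},
    {"role": "assistant", "content": "An API key is a secret string that identifies your account to a service."},
    # The actual prompt you want answered.
    {"role": "user", "content": "What is a token?"},
]


def count_roles(msgs):
    """Count how many messages use each role (hypothetical helper)."""
    counts = {}
    for message in msgs:
        counts[message["role"]] = counts.get(message["role"], 0) + 1
    return counts


print(count_roles(messages))
```

Adding more user/assistant pairs before the final user message is how you would extend the few-shot setup.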

MODE

In this case we want to mimic what we would experience in the ChatGPT UI, so we’re going to select “Chat”.

MODEL

“gpt-3.5-turbo” is the closest match to the current default ChatGPT model.

NOTE: While ChatGPT Plus gives you gpt-4 access through the UI, you will have to join the waitlist for gpt-4 API access. This will also give you access to the much larger 32k model.

TEMPERATURE

Higher temp = more randomness in the response.

The default for the UI is reportedly 0.7 to 0.8, because that range gives generally acceptable responses. Lower values make responses more predictable and higher values make them more creative, so experiment to get the types of responses you are looking for.
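For intuition about what temperature does, the sketch below applies the standard temperature-scaled softmax to a few made-up scores. This is a general illustration of the technique, not a description of OpenAI's internal implementation, and the scores are invented.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(score - largest) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up scores for three candidate next words.
logits = [2.0, 1.0, 0.1]
for temperature in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At low temperature almost all of the probability lands on the top-scoring word; at higher temperature the alternatives get a larger share, which is where the extra randomness comes from.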

MAXIMUM LENGTH

The maximum number of tokens in the response. The API is priced per 1k tokens, and the response counts toward that total.

TOP P

An alternative to temperature for controlling randomness: the model samples only from the most likely tokens whose combined probability reaches the Top P value.
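Top P is usually described as nucleus sampling. The sketch below shows the general idea on a made-up probability table: keep the most likely words until their combined probability reaches the threshold, then renormalize. The words, probabilities, and the `top_p_filter` name are assumptions for illustration, not OpenAI's code.

```python
def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, probability in ranked:
        kept.append((token, probability))
        cumulative += probability
        if cumulative >= top_p:
            break
    # Renormalize so the surviving probabilities sum to 1 again.
    total = sum(probability for _, probability in kept)
    return {token: probability / total for token, probability in kept}


# Made-up next-word probabilities.
probs = {"the": 0.5, "a": 0.3, "banana": 0.15, "xylophone": 0.05}
print(top_p_filter(probs, 0.75))
```

With a threshold of 0.75 only "the" and "a" survive, so the unlikely words can never be picked no matter how the sampling goes.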

FREQUENCY PENALTY

Keeps repeated words from dominating the response by penalizing words in proportion to how often they have already appeared. Useful if the model is repeating itself.

PRESENCE PENALTY

Another mechanism for encouraging variety: it penalizes any word that has already appeared in the text, regardless of how many times.
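The difference between the two penalties is easier to see in code. The sketch below follows the adjustment as I understand it from the OpenAI documentation: the frequency penalty is subtracted once per prior occurrence, and the presence penalty is subtracted once if the word has appeared at all. The scores, words, and function name are made up for illustration.

```python
from collections import Counter


def apply_penalties(logits, generated_tokens, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the score of tokens that have already been generated."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, logit in logits.items():
        count = counts.get(token, 0)
        adjusted[token] = (
            logit
            - count * frequency_penalty  # grows with every repeat
            - (1.0 if count > 0 else 0.0) * presence_penalty  # flat, applied once
        )
    return adjusted


# Made-up scores: three equally likely words, two of them already used.
logits = {"cat": 2.0, "dog": 2.0, "fish": 2.0}
generated = ["cat", "cat", "cat", "dog"]
print(apply_penalties(logits, generated, frequency_penalty=0.5, presence_penalty=0.5))
```

Here "cat" is pushed down the most because it was used three times, "dog" takes a smaller hit, and the unused "fish" keeps its original score.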

API Access

The Playground is effectively a UI for the API. Once you have a paid account you can create API keys here: https://platform.openai.com/account/api-keys.

I won’t go too far into API use because the API documentation and model documentation are very good. You can also find some solid examples on the OpenAI GitHub, but here are some quick thoughts.
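As a rough illustration of what programmatic access looks like, the sketch below builds (but does not send) a chat request using only the Python standard library. It assumes the chat completions endpoint and parameter names from the OpenAI API documentation, and it assumes your key is stored in an environment variable named `OPENAI_API_KEY`; the `build_chat_request` helper and the placeholder key are hypothetical.

```python
import json
import os
import urllib.request

# Chat completions endpoint, per the OpenAI API documentation.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, messages, model="gpt-3.5-turbo",
                       temperature=0.7, max_tokens=256):
    """Build an HTTP request mirroring the Playground settings (hypothetical helper)."""
    payload = {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Read the key from the environment; the fallback is a placeholder, not a real key.
api_key = os.environ.get("OPENAI_API_KEY", "sk-placeholder")
request = build_chat_request(api_key, [{"role": "user", "content": "Say hello."}])
print(request.get_method(), request.full_url)

# Sending is left commented out because it spends tokens on your account:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

In practice most people use the official client library instead; the point here is only that the Playground fields map directly onto a request body.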

If you are looking at older references you might discover a difference between expected formats. This is likely because GPT-3 and earlier completion models lump everything into one big prompt, while the chat models (gpt-3.5-turbo and gpt-4) separate the context from the prompt using SYSTEM/USER/ASSISTANT roles (the Playground is helpful in visualizing this).

Because these models are pay-per-use and tokens must be burned for each prompt, the API can become expensive when providing heavy prompt context. For example, a fully contextualized prompt for the 32k gpt-4 model can cost nearly $2 per API call!
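The arithmetic behind that figure can be sketched as follows. The per-1k-token prices below are assumptions based on the 32k gpt-4 pricing at the time of writing and will likely change, so check the current pricing page before relying on them.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  prompt_price_per_1k, completion_price_per_1k):
    """Estimate the dollar cost of one API call from its token counts."""
    prompt_cost = prompt_tokens / 1000 * prompt_price_per_1k
    completion_cost = completion_tokens / 1000 * completion_price_per_1k
    return prompt_cost + completion_cost


# ASSUMED prices in dollars per 1k tokens for the 32k gpt-4 model.
PROMPT_PRICE = 0.06
COMPLETION_PRICE = 0.12

# A prompt that fills roughly the whole 32k context, before counting any response.
cost = estimate_cost(32_000, 0, PROMPT_PRICE, COMPLETION_PRICE)
print(f"${cost:.2f}")
```

Under these assumed prices a 32,000-token prompt comes to about $1.92 before the response is counted, which is where "nearly $2" comes from.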

You’re still getting a lot out of this service so you may find that it’s worth it for your use case, but it is something to be aware of. If you're repeatedly providing the same context you may find that it is more appropriate to fine-tune your own models.[2]

Value-Added Services

The OpenAI API endpoints and Playground may be enough for you to consider a paid account, but the real unlock comes in the form of tooling that other people have figured out for you. Much of it is open source and available for free.

I want to preface this by saying I am hesitant to provide specific examples because:

I don’t want to specifically endorse anything here

I don’t know enough about the parties behind each solution to know if they are trustworthy

There are so many cool things out there that I don’t want to get stuck on any one solution

That said, it’s hard to describe the benefits without highlighting some utilities which allow you to do more with your API key. Here are just a few which have come up recently (note that there are THOUSANDS of similar utilities currently available).

Flux

Flux uses a concept called a “conversation tree” which is a way to visualize multiple responses. Instead of the linear Prompt/Completion that you get with ChatGPT, Flux allows you to trial a number of responses side-by-side and then continue pursuing the ones you like best.

AgentGPT

AgentGPT allows you to “Assemble, configure, and deploy autonomous AI Agents in your browser.” Or to say it another way, you can set a goal, let AI figure out how to accomplish the goal, and allow the AI to execute so it can actually achieve your goal.

AutoGPT

https://github.com/Significant-Gravitas/Auto-GPT

For the more tech savvy there are a variety of powerful autonomous AI Agents which you can run locally and configure yourself. One such example is AutoGPT.[3]

Don’t limit yourself to these solutions… there are many more!

Downsides, Risks, & Other Considerations

API keys are a secret! You will need to be sure you are only sharing keys with services that you trust. Helping you identify trustworthy services is well beyond the scope of this article, but be sure to practice due diligence in sharing API keys. Anyone who has your API keys can use your account on your behalf, so you are effectively authorizing them to spend money on your account.
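If you do end up using a key in your own scripts, one common way to keep it secret is to read it from an environment variable instead of pasting it into code, and to mask it anywhere it might be printed. This is a minimal sketch; the variable name `OPENAI_API_KEY` and both helper functions are assumptions for illustration.

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Read the key from an environment variable so it never lives in source code."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


def mask_key(key, visible=4):
    """Hide all but the last few characters so the key is safe to log."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


# A made-up string, not a real key.
print(mask_key("sk-example-not-a-real-key"))
```

Keeping the key out of your files also means you can share or publish a script without leaking access to your account.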

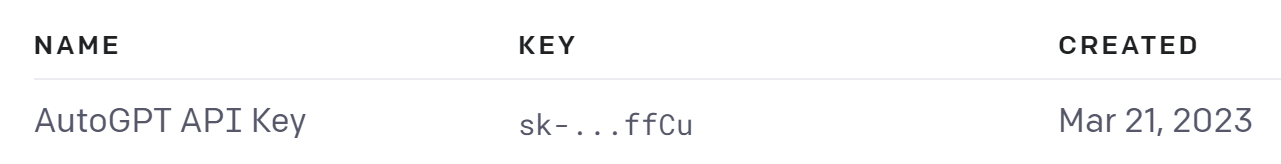

Create one API key per service and label your API keys so that you can easily keep track of what each key is used for.

Set usage limits and warning limits for your account. Doing this ensures that neither your own use, the services you authorize, nor any abuse of your API keys results in a surprise bill. You can set usage limits at: https://platform.openai.com/account/billing/limits

Rotate your API keys to reduce the potential for theft and abuse. You don’t know how secure the services are or how they are storing your keys, so it is a wise habit to delete and recreate your API keys at regular intervals, say once per month.

Delete old and unused API keys. Take a look at how often you use each key, and delete the ones you no longer use to prevent misuse and abuse.

Understand retention and use policies for any third-party services. OpenAI says they will keep your API data for 30 days, won’t share that data, and won’t use it for model training, but that may not be true for other services that you use.
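The labeling, rotation, and cleanup habits above can be tracked with something as simple as a hand-maintained list. The sketch below flags keys that are due for rotation or deletion; the labels, dates, and 30-day thresholds are all hypothetical, and nothing here talks to OpenAI.

```python
from datetime import date


def keys_needing_attention(keys, today, max_age_days=30, max_idle_days=30):
    """Return (label, action) pairs for keys that look stale."""
    flagged = []
    for key in keys:
        idle_days = (today - key["last_used"]).days
        age_days = (today - key["created"]).days
        if idle_days > max_idle_days:
            flagged.append((key["label"], "delete"))  # unused: remove it
        elif age_days > max_age_days:
            flagged.append((key["label"], "rotate"))  # in use but old: recreate it
    return flagged


# Hypothetical inventory: one labeled key per service.
inventory = [
    {"label": "flux", "created": date(2023, 3, 1), "last_used": date(2023, 4, 30)},
    {"label": "agentgpt", "created": date(2023, 4, 20), "last_used": date(2023, 4, 28)},
    {"label": "old-experiment", "created": date(2023, 1, 10), "last_used": date(2023, 2, 1)},
]
print(keys_needing_attention(inventory, today=date(2023, 5, 1)))
```

Even a spreadsheet does the same job; the point is to record a label, a creation date, and a last-used date for every key you hand out.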

The Takeaway

GPT-4 has led to a significant increase in related tooling and you can use API keys to access and benefit from these developments.

While ChatGPT Plus offers a convenient and easy-to-use interface, the API key provides more advanced functionality and a wealth of third-party tools and services. The Playground, API access, and value-added service providers can greatly enhance your experience. However, it's essential to be cautious when sharing your API keys, set appropriate usage limits, and be mindful of potential risks and data retention policies of third-party services.

As this type of approach is becoming more popular, you’ll want to keep your eyes open for new opportunities as there is a lot more on the horizon. By carefully considering these risk factors, you can unlock the full potential of GPT-4 and its associated tools, creating a more enriching experience with AI technologies.

In an earlier article I describe cost considerations for ChatGPT Plus vs. the API. The article also covers context which is helpful if you want to cost optimize your prompts.

https://brainwavelabs.substack.com/i/115077996/cost-considerations